Last week I was at the ITS World Congress to talk a little about AI. Our panel was outstanding and brought up a wide range of transportation issues. I'll highlight a few at the end, but I promised a high-level summary of my slides this week, and I'm here to deliver:

What do we mean by Intelligence?

AI is a hot new topic, but most folks are thinking in terms of the flashy new LLMs. They feel like something amazingly new, but under the hood, the algorithms have been developing for decades. What sets an AI apart from a simple computer program?

Complexity: it's trying to solve a problem that has many competing factors and an answer that is much more than a simple yes or no.

Self-adjusting: the system needs to respond to a wide range of circumstances, adjusting its own internal parameters as problems come up.

Probabilistic: intelligent systems recognize that the world is not merely a set of levers that always produce the same outputs. Like a roll of the dice, the environment is chaotic in any single moment but falls into repeatable patterns over time. Fuzziness is a part of life, and the system accounts for it.

Given these definitions, we have been using artificial intelligence in transportation for a very long time. Almost all of our day-to-day tools (Synchro, HCS, travel demand models) are essentially intelligent expert systems that combine deterministic rules with probability to estimate likely outcomes. Most of them iterate to reach those solutions, which makes them far more self-adjusting than we realize. As these tools have grown, they have come to incorporate a wide range of machine learning algorithms.
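To make the "iterate to a solution" point concrete, here is a minimal sketch of one textbook iterative method: the method of successive averages (MSA) paired with the standard BPR volume-delay function, applied to a hypothetical two-route network. It is not how Synchro, HCS, or any particular demand model is implemented, and the free-flow times, capacities, and demand below are invented for illustration. Each pass sends everyone to whichever route is currently fastest, then blends that into the running answer, so congestion on one route keeps nudging traffic toward the other until travel times roughly balance.

```python
"""Toy illustration of iterative, self-adjusting traffic assignment.

All network numbers are hypothetical; this is a sketch, not any tool's internals.
"""


def bpr_time(free_flow_min: float, volume: float, capacity: float) -> float:
    """BPR volume-delay function: travel time grows as volume approaches capacity."""
    return free_flow_min * (1 + 0.15 * (volume / capacity) ** 4)


def assign_two_routes(
    demand: float, routes: list[tuple[float, float]], iterations: int = 200
) -> list[float]:
    """Split demand across routes with the method of successive averages (MSA).

    routes: (free-flow time in minutes, capacity in veh/h) for each route.
    Each iteration loads all demand onto the currently fastest route
    (all-or-nothing), then moves the running volumes toward that target
    with step size 1/n, so later iterations make smaller corrections.
    """
    volumes = [0.0] * len(routes)
    for n in range(1, iterations + 1):
        times = [bpr_time(t0, v, c) for (t0, c), v in zip(routes, volumes)]
        fastest = times.index(min(times))
        target = [demand if i == fastest else 0.0 for i in range(len(routes))]
        step = 1.0 / n
        volumes = [v + step * (t - v) for v, t in zip(volumes, target)]
    return volumes


if __name__ == "__main__":
    # Hypothetical network: a quick low-capacity route and a slower high-capacity one.
    routes = [(10.0, 1000.0), (15.0, 2000.0)]
    flows = assign_two_routes(3000.0, routes)
    for i, ((t0, c), v) in enumerate(zip(routes, flows)):
        print(f"Route {i}: {v:.0f} veh/h, {bpr_time(t0, v, c):.1f} min")
```

Real demand models add many more links, origin-destination pairs, and feedback loops, but the same basic idea of re-running the model on its own outputs until it settles is what makes them self-adjusting.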

How is AI getting used in Transportation?

Over the last 6 months, I’ve been asking transportation practitioners how they’re using AI. The answer is usually, “Not much,” but there were a few novel applications that are worth talking about.

Use Case I: Paperwork. Large Language Models (LLMs) are great for summarizing and creating documentation. Their output needs to be back-checked frequently, but they can save a bunch of time in what is usually a pretty tedious process.

Use Case Ia: Tone check. If you didn't have autistic traits when you went into engineering school, you will by the time you get out of it. That can leave engineers floundering when they deal with the public or with policy makers. One of my friends told me they ran their email correspondence through ChatGPT and asked it to check the tone of the conversation, then asked it for a strategy to address the underlying emotional issues. This can be a huge blind spot for engineers, which makes this strategy absolutely brilliant.

Use Case II: AI Add-ins. Another friend showed me how to use the new built-in tools in Excel to generate heat maps much faster than GIS ever could. A simple add-in turned a 5-hour slog into a 5-minute affair. It's nice to have something to look at before you forget what question you wanted to ask. There's a wide range of new add-ins in almost every piece of software; it just takes figuring out that they're there and how to use them.

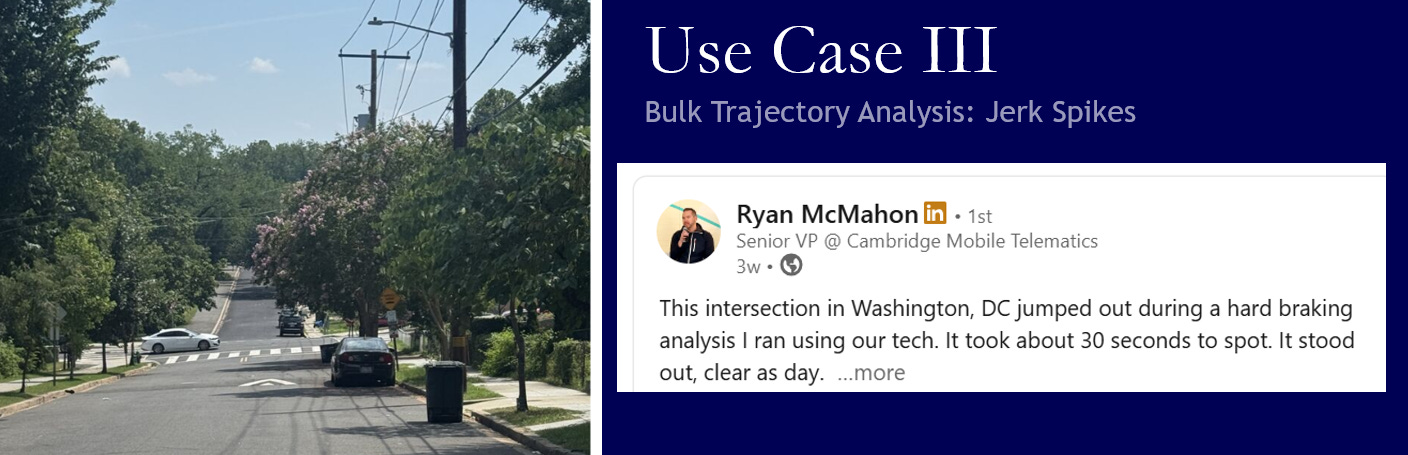

Use Case III: Jerk. Jerk is the rate of change of acceleration. I've known about this one for a while, but it was nice to see someone actually use it in real life. A near miss almost always involves hard braking or hard acceleration. If you can get trajectory data and identify where jerk spikes happen frequently, you'll find your crash hot spots, and you may even be able to prevent a crash before it happens. Shoutout to Ryan McMahon for showing how it's done. In one case, a tree had grown over a stop sign. The team still had to go look at the site to figure out what to fix, but the AI analysis showed them where to look.
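A minimal sketch of the jerk screen, assuming speed traces sampled at a fixed interval `dt` (seconds, speeds in m/s), a location label for each sample, and a hypothetical spike threshold of 2.0 m/s³. Jerk is approximated with finite differences of speed. The function names and the threshold are illustrative assumptions; the post doesn't describe the exact method used in the analysis above.

```python
"""Sketch: find jerk spikes in speed traces and tally them by location.

Assumptions (hypothetical): fixed sampling interval dt in seconds, speeds in m/s,
one location label per sample, and a default spike threshold of 2.0 m/s^3.
"""
from collections import Counter


def jerk_series(speeds: list[float], dt: float) -> list[float]:
    """Approximate jerk by differencing speed twice (speed -> accel -> jerk)."""
    accel = [(b - a) / dt for a, b in zip(speeds, speeds[1:])]
    return [(b - a) / dt for a, b in zip(accel, accel[1:])]


def jerk_spikes(speeds: list[float], dt: float, threshold: float = 2.0) -> list[int]:
    """Indices into the jerk series where |jerk| exceeds the threshold."""
    return [i for i, j in enumerate(jerk_series(speeds, dt)) if abs(j) > threshold]


def hotspot_counts(
    trajectories: list[tuple[list[float], list[str]]], dt: float, threshold: float = 2.0
) -> Counter:
    """Count jerk spikes per location label across many trajectories."""
    counts: Counter = Counter()
    for speeds, cells in trajectories:
        for i in jerk_spikes(speeds, dt, threshold):
            # Jerk value i uses speed samples i, i+1, i+2, so it is centered on i+1.
            counts[cells[i + 1]] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical trace: cruise at 15 m/s, brake at 3 m/s^2 for two seconds, hold 9 m/s.
    speeds = [15.0, 15.0, 15.0, 12.0, 9.0, 9.0, 9.0]
    cells = ["A", "A", "B", "B", "B", "C", "C"]
    print(jerk_spikes(speeds, dt=1.0))                # → [1, 3]
    print(hotspot_counts([(speeds, cells)], dt=1.0))  # → Counter({'B': 2})
```

With real trajectory data you would tally spikes over thousands of trips; locations that keep rising to the top of the count are the places worth a field visit.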

New Possibilities:

Here are a few new use cases that would have been unthinkable a few years ago.

Animating Plans. If we're going to get good at serving vulnerable users, we need to be able to look up from the cross section. It's one thing to create a plan. It's another thing to move around in that place and see how it works. It should become routine to take Street View images of the area, update them with the new plan, and take it for a test walk. Would you let your 7-year-old use it? Would you let your grandmother walk it? These need to be standard back-checks at the end of every street design. Here's a video that the architect Eric Valle generated from a watercolor sketch.

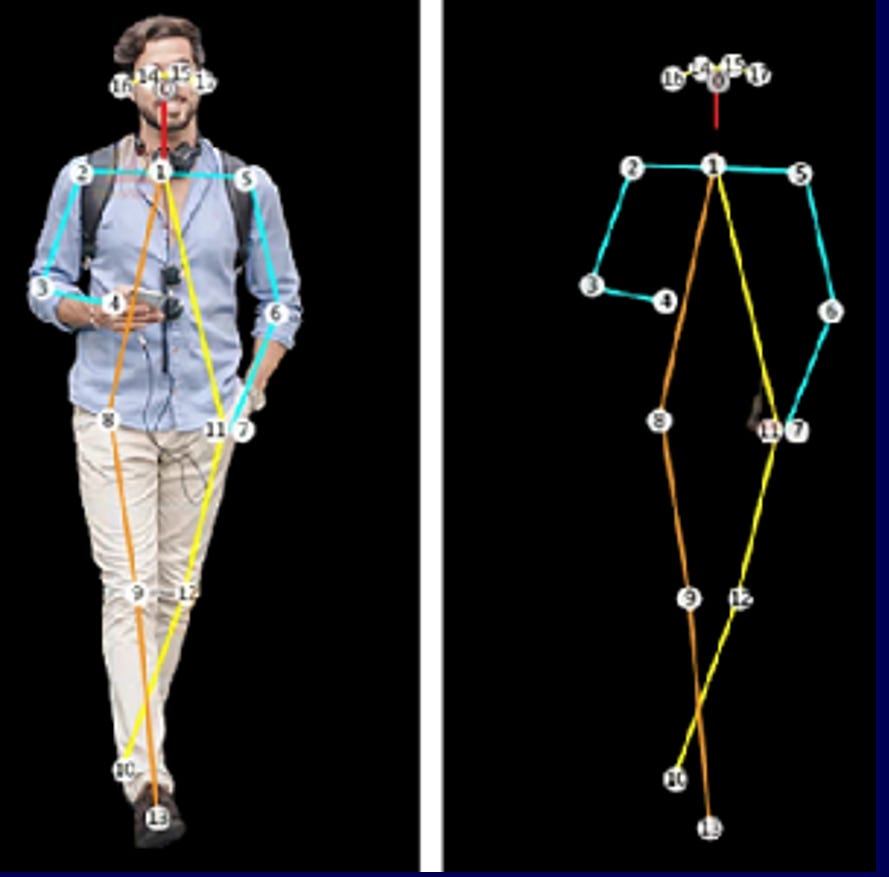

ML Vision Analysis: We use concrete measurements from our plans to identify safety issues, but drivers don't read our plans. It's easy for us to extract meaning from what we see with our own eyes. It's much harder to translate what drivers are seeing into variables that we can analyze.

For example, when you come up to an intersection, you can easily see whether someone is trying to cross the street or just hanging out. I've worked with folks like Dr. Zaki, who created algorithms that can tell from a person's posture whether they are ready to cross.

The speed prediction formulas we created in the FDOT Mental Frameworks research used variables seen from the driver's point of view. It's easy to apply them at a single location, but to measure those variables across a whole network, you would need an ML algorithm. Measuring doorway density means you need an algorithm that can see how many doors are there and which ones would be useful. The visual width of the corridor is how wide the corridor looks: easy to see, but hard to measure without AI.
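Once a vision model has found the doors, turning its detections into a density variable is simple bookkeeping. The sketch below assumes a hypothetical detection record carrying a segment ID and an `active` flag (the "which ones would be useful" judgment), and reports active doors per 100 ft of frontage. The record layout, the flag, and the per-100-ft unit are all assumptions for illustration; the variable definition in the FDOT Mental Frameworks research may differ.

```python
"""Sketch: convert (hypothetical) door detections into doorway density per segment."""
from dataclasses import dataclass


@dataclass
class DoorDetection:
    """One door found by a vision model along a street segment (hypothetical schema)."""
    segment_id: str
    active: bool  # hypothetical flag: a usable entrance, not a service or boarded-up door


def doorway_density(
    detections: list[DoorDetection],
    segment_lengths_ft: dict[str, float],
    per_ft: float = 100.0,
) -> dict[str, float]:
    """Active doors per `per_ft` feet of frontage for each segment (lengths must be > 0)."""
    counts = {seg: 0 for seg in segment_lengths_ft}
    for det in detections:
        # Ignore doors flagged as not useful and segments with no known length.
        if det.active and det.segment_id in counts:
            counts[det.segment_id] += 1
    return {seg: counts[seg] * per_ft / length for seg, length in segment_lengths_ft.items()}


if __name__ == "__main__":
    # Invented example: three usable doors and one service door on A, one usable door on B.
    detections = [DoorDetection("A", True)] * 3 + [DoorDetection("A", False), DoorDetection("B", True)]
    print(doorway_density(detections, {"A": 400.0, "B": 250.0}))  # → {'A': 0.75, 'B': 0.4}
```

The hard part is the detector itself; keeping the aggregation this simple makes it easy to audit what the model counted.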

LLM Text Analysis: One of the speakers in our session, Dr. Jidong Yang, analyzed crash reports by converting the tabular crash data into a written narrative. For some reason, the LLM was a little better at analyzing the data when it was in narrative form. I challenged him to include the police narrative as well. Someone needs to identify which crashes are "looked but didn't see" crashes, and that will only be found in those narratives. Once you can tag crashes that way, you could look at the types of places that get those crashes and begin to understand when drivers aren't capable of seeing what we think they can.
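A minimal sketch of the "table to narrative" step, with the police narrative appended as suggested above. All field names are hypothetical, the invented record is for illustration only, and the prompt wording is just one possible phrasing. The code builds text only; it does not call any particular LLM API, and it is not Dr. Yang's actual method.

```python
"""Sketch: turn a tabular crash record into narrative text plus a tagging prompt.

Field names are hypothetical; real crash databases use their own schemas.
"""


def crash_to_narrative(record: dict) -> str:
    """Render one crash record as plain-language sentences."""
    sentences = [
        f"A crash classified as {record['crash_type']} occurred at "
        f"{record['location']} on {record['date']} under {record['lighting']} conditions.",
        f"It involved {record['vehicles']} vehicle(s) and {record['pedestrians']} pedestrian(s).",
    ]
    # Carry the officer's own words along when present; cues such as
    # "looked but didn't see" show up in that free text, not in coded fields.
    narrative = record.get("police_narrative")
    if narrative:
        sentences.append(f"Officer narrative: {narrative}")
    return " ".join(sentences)


def looked_but_did_not_see_prompt(crash_text: str) -> str:
    """Build a hypothetical yes/no tagging prompt; sending it to a model is left out."""
    return (
        "Read the crash description below. Answer YES if it suggests a driver "
        "looked but did not see another road user, otherwise answer NO.\n\n"
        + crash_text
    )


if __name__ == "__main__":
    # Invented record for illustration only.
    record = {
        "crash_type": "angle",
        "location": "Main St and 5th Ave",
        "date": "2024-03-02",
        "lighting": "dark, lighted",
        "vehicles": 2,
        "pedestrians": 0,
        "police_narrative": "Driver 1 stated she looked left and did not see Vehicle 2.",
    }
    print(looked_but_did_not_see_prompt(crash_to_narrative(record)))
```

The YES/NO answers could then be joined back to crash locations to see which kinds of places produce the most "looked but didn't see" crashes.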

AI Fallacies

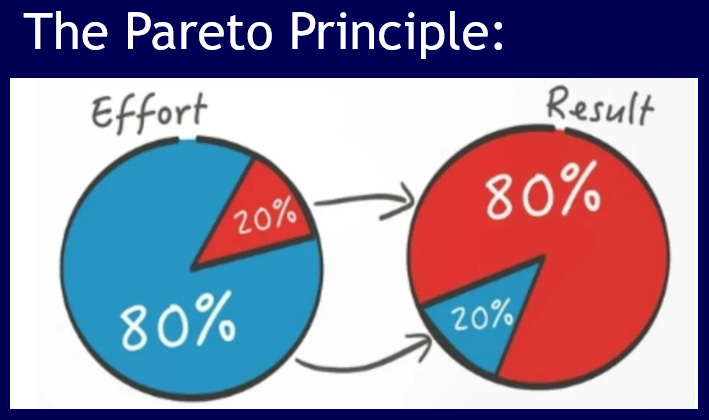

My biggest gripe with AI is rooted in the Pareto Principle:

The first 20% of your effort will get you 80% of your results. Past that, you’re going to be spending a lot of time and effort and getting precious little for it. When you reach that point, it’s time to reexamine your strategy. If you change your approach, you can start a brand new 20% of effort, which will get you big results.

Here’s the question:

Is your AI solution working to get you that last 20% of results or is it a different strategy that can get you a new 80%?

It could be either.

There are times when AI is eking out a minor optimization. My friend Pete Yauch once asked me why I preferred to approach congestion as a plangineer rather than from the operations side. My argument was that optimizing traffic flow could get me at most 20% more throughput. Land use changes could cut my trip lengths by 80%. Mode shift could get me even more. And those land use and mode shift changes would reshape the way the community interacted, giving me a place worth enjoying and all the economic benefits that come with it.

Later in the day, I met Jean-Ellen Willis from Dublin, Ohio. They've already done the hard work at the land use and mode shift level. They have over 150 miles of multi-use paths. Their street network is a little better connected than most suburban networks, but not by much. When they run on-demand transit, it works: they get 10-minute response times. Because they made walking and biking their first priority, everything else works. They don't need more than 5 lanes anywhere, and they don't have many of those. They handle large events every year with only minor inconveniences.

What they were struggling with was pedestrian and bicycle safety. They used a suite of AI tools to optimize signals and meter the traffic volumes in their downtown area. It dropped their pedestrian crash rates by 75%. Would they have gotten the same results if they hadn’t already done the basics? Probably not.

AI risks and opportunities:

There were several neat things that came out of our session. Seyhan Ucar from Toyota's Vision Zero program talked about using trajectory data to identify distracted driving so that he could add an in-vehicle warning.

Dr. Srinivas Peeta talked about cybersecurity threats within AI and the need for both people and AI to recognize when something in the automation is off or malicious. We need to be able to recognize and explain what the algorithms are doing as they do it, both to help them improve and to explain what happened when things go wrong.

Angelos Amditis is the Chairman of ITS-Europe. He talked about the new European AI legislation and the challenges of applying AI ethically. He also highlighted that hybrid intelligence, the partnership of human intelligence (HI) and AI, is utterly critical to any successful implementation. Figuring out how to identify the risks involved in each AI system, at both the system level and the individual level, is key to an ethical approach to AI. That's going to require open data standards, tight collaboration, and absolute transparency.

In my work with the disability community, I have found a new definition for success:

I can do it again.

We won’t learn how to fix mistakes that don’t get made, but as we make mistakes, some are easier to bounce back from than others. Vision Zero needs to be an absolute core tenet of any AI deployment. A system that creates irrecoverable failures will not be allowed to continue.

The truth is, more often than not, we're trying to treat the pain without truly understanding the underlying causes that created the problem. Symptom management can kill you if you are suffering from cancer. It's important to keep asking "why" until we get to the root cause, even if that root feels like it's out of our control. If you understand the root causes, you'll have a better chance of applying an AI strategy that truly fixes things rather than perpetuating or enabling the problem at a deeper level.